Exposure Analytics for Risk Managers – Analyze This!

Back in 1999, when I watched the movie Analyze This, it was rather whimsical to hear technology interjected into a serious discussion among mobsters. Robert De Niro’s subordinate realized that the organization needed to change with the times and advised his boss to modernize how they made decisions so that those decisions would be better informed. De Niro’s character had been struggling with his emotions and indecisiveness, which ultimately prompted him to seek professional help.

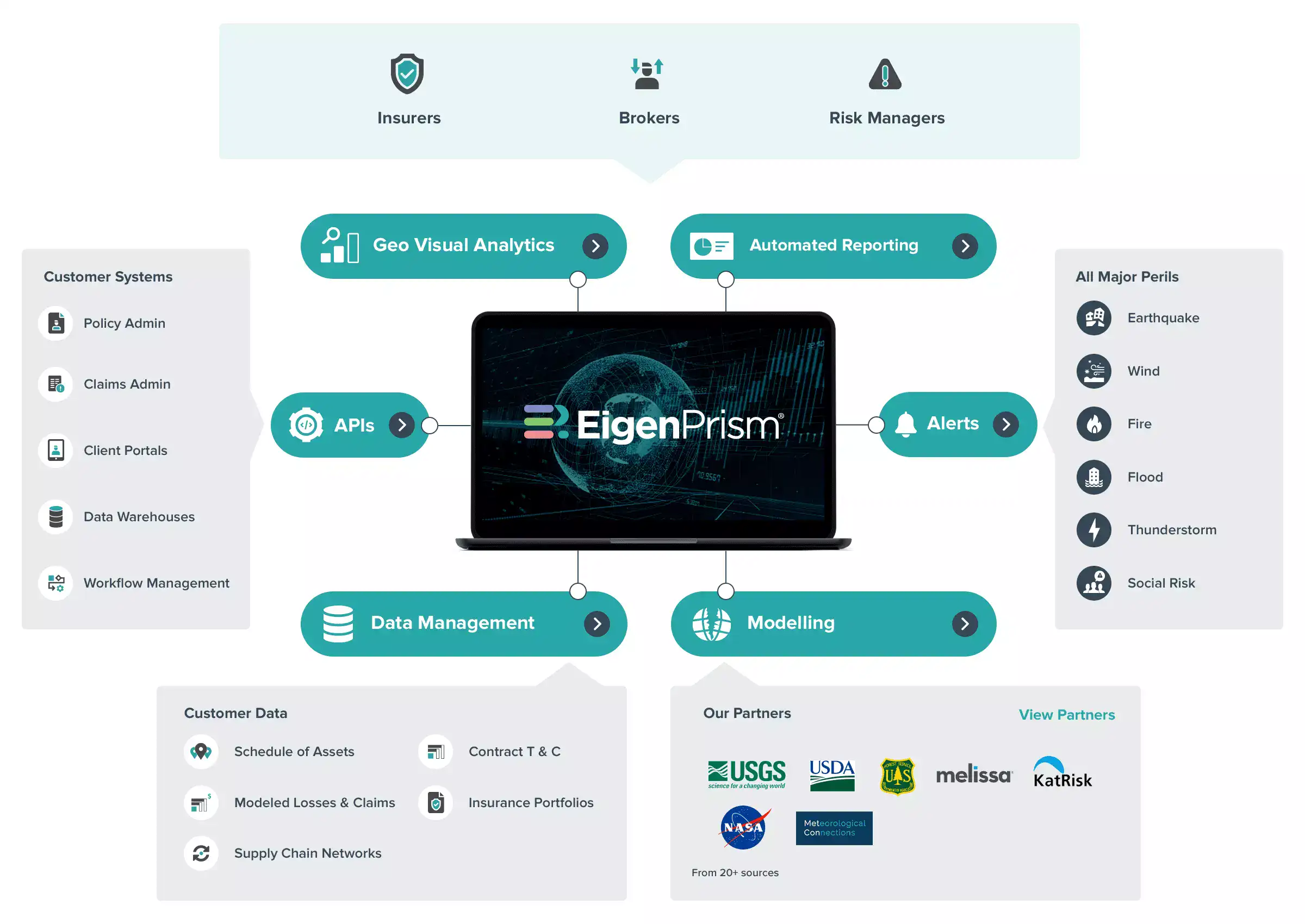

Times are beginning to change for corporate risk managers, and it is exciting. Today’s computational power, coupled with the development of big data technology for risk and insurance, is shifting the industry from transactional to analytics-based decision making. This frees up time, reduces cost and improves data quality, which in turn lowers a risk manager’s total cost of risk. The beauty of this transformation is that it is open, easy and cost-effective.

Business processes for risk retention, transfer and management are becoming increasingly data driven. The many hours once spent collecting and analyzing exposure data and preparing or reusing legacy statement of values worksheets, program schematics, premium allocations and risk retention analyses are giving way to automated platforms that ingest, consolidate and model the data.

The average cycle time for a global property renewal is about 90 days. The process has two phases: Preparation (collecting values, analyzing, modeling and sending specifications) and Negotiation with different markets. Most of that time goes into Preparation rather than the actual Negotiation.

Technology today can shrink Preparation from months to days. For instance, once the values are imported into the system, the client and broker can collaboratively pre-underwrite the risk, reducing some of the uncertainty by the time the submission reaches the account underwriters.

The system can create stratification-of-values graphs that show the underwriter how much value is exposed to specific catastrophe (cat) and non-cat events, a principal driver in calculating property premiums. Characteristics such as distance to coastline, values in flood zones and values in brushfire areas are displayed automatically through business intelligence dashboards and mapping tools.
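To make the idea concrete, here is a minimal sketch of a stratification of values by distance-to-coast band. The location schedule, the field layout and the band edges are all hypothetical and chosen only for illustration; a real platform would read these from the imported statement of values and may use different bands or perils.

```python
# Hypothetical schedule of locations:
# (location_id, total insured value in USD, distance to coast in km).
# All figures are illustrative, not real exposure data.
LOCATIONS = [
    ("LOC-001", 25_000_000, 0.5),
    ("LOC-002", 40_000_000, 3.0),
    ("LOC-003", 15_000_000, 12.0),
    ("LOC-004", 60_000_000, 45.0),
    ("LOC-005", 10_000_000, 1.8),
]

# Distance bands as (exclusive upper bound in km, label); the edges are an assumption.
BANDS = [(1.0, "0-1 km"), (5.0, "1-5 km"), (25.0, "5-25 km"), (float("inf"), "25+ km")]


def band_for(distance_km):
    """Return the label of the first band whose upper bound exceeds the distance."""
    for upper, label in BANDS:
        if distance_km < upper:
            return label
    return BANDS[-1][1]  # only reached for non-comparable input such as NaN


def stratify(locations):
    """Sum location count and insured value per distance-to-coast band."""
    totals = {label: [0, 0] for _, label in BANDS}  # label -> [count, value]
    for _, tiv, distance in locations:
        entry = totals[band_for(distance)]
        entry[0] += 1
        entry[1] += tiv
    return totals


if __name__ == "__main__":
    grand_total = sum(tiv for _, tiv, _ in LOCATIONS)
    for label, (count, value) in stratify(LOCATIONS).items():
        print(f"{label:>8}: {count} location(s), ${value:,.0f} ({value / grand_total:.0%})")
```

The same grouping works for any banded characteristic, such as flood zone or brushfire area, by swapping the distance column and band definitions.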

Data augmentation will also improve quality. Addresses can be automatically cross-checked against GPS coordinates and enriched with building characteristics such as roof age and condition, past events and claims history. Regulatory rules can likewise be checked to determine whether a particular location or country requires admitted insurance, minimizing the cost of non-compliance penalties and fines.

New technology is fun! This may sound like a contradiction in terms to some in the insurance community. Testing your limits and retentions, as well as natural catastrophe (NatCat) model results such as average annual loss (AAL), has become a lot easier and, yes, fun. Users can now superimpose hypothetical wind, earthquake and explosion events, drawn as simple shapes, onto their locations to estimate their net retentions at the program and location level.
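As a rough illustration of superimposing a simple shape, the sketch below treats a hypothetical event as a circle, applies an assumed flat damage ratio to every location inside it, and splits the loss into what the insured retains and what is ceded. The `Location` fields, the per-location deductible, the single program limit and all numbers are assumptions made for this example; real programs usually have layers, sublimits and peril-specific terms.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class Location:
    name: str
    lat: float
    lon: float
    tiv: float         # total insured value (USD)
    deductible: float  # hypothetical per-location deductible retained by the insured (USD)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def footprint_loss(locations, center, radius_km, damage_ratio, program_limit):
    """Apply a circular footprint with a flat damage ratio and split the loss.

    Returns (ground_up, ceded, net_retained, affected_names).
    Net retention = location deductibles + any loss above the program limit.
    """
    ground_up = retained = above_deductibles = 0.0
    affected = []
    for loc in locations:
        if haversine_km(center[0], center[1], loc.lat, loc.lon) <= radius_km:
            loss = loc.tiv * damage_ratio
            ground_up += loss
            retained += min(loss, loc.deductible)              # location-level retention
            above_deductibles += max(loss - loc.deductible, 0.0)
            affected.append(loc.name)
    ceded = min(above_deductibles, program_limit)               # program-level cap
    net_retained = retained + (above_deductibles - ceded)
    return ground_up, ceded, net_retained, affected


# Hypothetical portfolio; coordinates are illustrative points in South and Central Florida.
PORTFOLIO = [
    Location("A", 25.77, -80.19, 20_000_000, 500_000),
    Location("B", 26.12, -80.14, 30_000_000, 500_000),
    Location("C", 28.54, -81.38, 50_000_000, 500_000),
]

if __name__ == "__main__":
    g, c, n, hit = footprint_loss(PORTFOLIO, (25.76, -80.19), 50.0, 0.25, 10_000_000)
    print(f"affected={hit} ground-up=${g:,.0f} ceded=${c:,.0f} net retained=${n:,.0f}")
```

Changing the radius, damage ratio or program limit and rerunning is the "test your limits" exercise in miniature.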

To take this a step further, the move to more open modeling platforms is making complex predictive risk models more accessible to risk managers. You no longer need a PhD to run these deterministic models. Users can simply select a set of historical or potential events and apply them to their portfolio of locations. These models carry granular event characteristics, such as wind speeds or flood depths that vary by location, as well as vulnerability functions that vary by asset characteristics such as construction and occupancy. Using their own policy terms and conditions, risk managers can then estimate what the policy would pay out under different scenarios. The results are computed by the model and are specific to their particular risk profile.
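The chain described above (event intensity per location, a vulnerability function per construction class, then policy terms) can be sketched as follows. The vulnerability curves, wind speeds, portfolio and the simple per-location deductible and limit are hypothetical placeholders, not calibrated engineering curves or any vendor’s model; occupancy is omitted to keep the example short.

```python
from bisect import bisect_right

# Hypothetical vulnerability curves: wind speed (m/s) -> mean damage ratio, by construction.
# Illustrative placeholder points only, not calibrated engineering curves.
VULNERABILITY = {
    "wood_frame": ([20.0, 30.0, 40.0, 50.0, 60.0], [0.00, 0.05, 0.20, 0.50, 0.80]),
    "masonry":    ([20.0, 30.0, 40.0, 50.0, 60.0], [0.00, 0.02, 0.10, 0.30, 0.60]),
}


def damage_ratio(construction, wind_speed):
    """Linearly interpolate the damage ratio, clamping outside the curve's range."""
    speeds, ratios = VULNERABILITY[construction]
    if wind_speed <= speeds[0]:
        return ratios[0]
    if wind_speed >= speeds[-1]:
        return ratios[-1]
    i = bisect_right(speeds, wind_speed)  # speeds[i-1] <= wind_speed < speeds[i]
    x0, x1 = speeds[i - 1], speeds[i]
    y0, y1 = ratios[i - 1], ratios[i]
    return y0 + (y1 - y0) * (wind_speed - x0) / (x1 - x0)


def policy_payout(ground_up, deductible, limit):
    """Insurer pays the loss above the deductible, capped at the limit."""
    return min(max(ground_up - deductible, 0.0), limit)


def run_scenario(portfolio, wind_by_location, deductible, limit):
    """Return {location: (ground-up loss, payout)} for one deterministic event."""
    results = {}
    for name, tiv, construction in portfolio:
        wind = wind_by_location.get(name, 0.0)  # locations outside the footprint see no wind
        loss = tiv * damage_ratio(construction, wind)
        results[name] = (loss, policy_payout(loss, deductible, limit))
    return results


# Hypothetical portfolio (name, TIV in USD, construction) and event wind field (m/s).
PORTFOLIO = [
    ("Plant 1", 10_000_000, "wood_frame"),
    ("Plant 2", 8_000_000, "masonry"),
    ("Plant 3", 12_000_000, "masonry"),
]
EVENT_WIND = {"Plant 1": 45.0, "Plant 2": 35.0}

if __name__ == "__main__":
    for name, (loss, paid) in run_scenario(PORTFOLIO, EVENT_WIND, 250_000, 2_500_000).items():
        print(f"{name}: ground-up ${loss:,.0f}, policy pays ${paid:,.0f}")
```

Swapping in a different event dictionary is the "select a historical or potential event" step; the vulnerability and policy functions stay the same.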

So what does analytics technology mean for risk managers? It gives them the power to analyze their own exposure data, the basis for modeling and pricing risk. The ability to simulate different permutations of events with scientifically developed models will help level the playing field in the insurance marketplace. It will give the risk manager and the company the confidence to select the appropriate retentions, limits and conditions.

Most risk managers today struggle to find ways to harness the power of their own data. Technology is now unlocking this value in ways that were previously unthinkable, yielding better insight into an organization’s risk profile and better-informed, data-driven decisions.

Unlike Robert De Niro’s character, you don’t need professional help. As a risk manager who is empowered with data and analytics, you are your own boss.

Eduardo Hernandez is a co-founder and Business Development leader for EigenRisk, a risk analytics company serving the (re)insurance, intermediary and risk management community. He has 20 years of risk and insurance management experience in underwriting, placement, product development and sales.