Speed-of-thought risk analytics

What is speed-of-thought risk analytics?

There is a paradigm shift happening in the world of (re)insurance risk analytics. It is driven by the advent of the latest generation of high-performance computing technology that allows the processing of billions of rows of data in a matter of milliseconds.

Modeling vs Analytics

First, let us set some context. We are talking about the class of risk analytics (catastrophe risk analysis being one example) that has traditionally been accomplished in a three-step workflow of data preparation, risk modeling, and (actual) risk analytics.

This distinction between modeling and analytics is more than just semantics. Risk analytics tries to draw conclusions from hypothetical scenarios of the future. This is different, for example, from claims analytics, which operates on actual claims data. Risk modeling, on the other hand, is what creates these scenarios. It involves some combination of scientific, engineering, and actuarial models informed by, but not directly tied to, historical data.

When you run a risk model, you are creating hundreds of thousands of scenarios, possibly for millions of locations in a (re)insurance portfolio. This is “big data” produced by just one model run. Now add in the fact that these models are often quite complex, particularly those for natural catastrophes, and you have a particularly challenging problem to tackle. Not surprisingly, the speed at which risk models can be run has long been severely limited by technology constraints. To get around these limitations, modelers came up with clever approximations and techniques to optimize for performance, but consumers paid the price in reduced transparency and even spurious results (as anyone who has grappled with “secondary uncertainty” in catastrophe models will testify). Despite these efforts, analysis times were measured in hours, or even days for larger data sets.
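To get a feel for the scale described above, here is a rough back-of-the-envelope sketch in Python. The function name `scenario_location_rows`, the specific counts, and the `hit_fraction` parameter (the share of scenario/location pairs that actually produce a loss record) are illustrative assumptions, not figures from any particular model.

```python
def scenario_location_rows(num_scenarios: int,
                           num_locations: int,
                           hit_fraction: float = 1.0) -> int:
    """Estimate how many scenario/location loss records one model run produces.

    hit_fraction is a hypothetical knob: the share of scenario/location
    pairs that actually yield a loss record (1.0 means every pair does).
    """
    if not 0.0 <= hit_fraction <= 1.0:
        raise ValueError("hit_fraction must be between 0 and 1")
    return round(num_scenarios * num_locations * hit_fraction)


# Assumed sizes: 100,000 scenarios and 1,000,000 locations.
dense = scenario_location_rows(100_000, 1_000_000)
# Assumed sparsity: only 0.1% of pairs produce a loss record.
sparse = scenario_location_rows(100_000, 1_000_000, hit_fraction=0.001)

print(f"Every scenario touches every location: {dense:,} rows")
print(f"Only 0.1% of pairs produce a loss:     {sparse:,} rows")
```

Even under the sparse assumption, a single run yields on the order of a hundred million rows, which is why the output of one model run already counts as “big data.”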

New Technology

With the advent of new technology and hardware over the past decade, models have certainly progressed in speed, but they have also become more complex. Overall, the time to run a given model has fallen appreciably, but not to the point where business workflows have changed dramatically. Analytics continues to take a back seat to data preparation and modeling in the data-to-decision timeline.

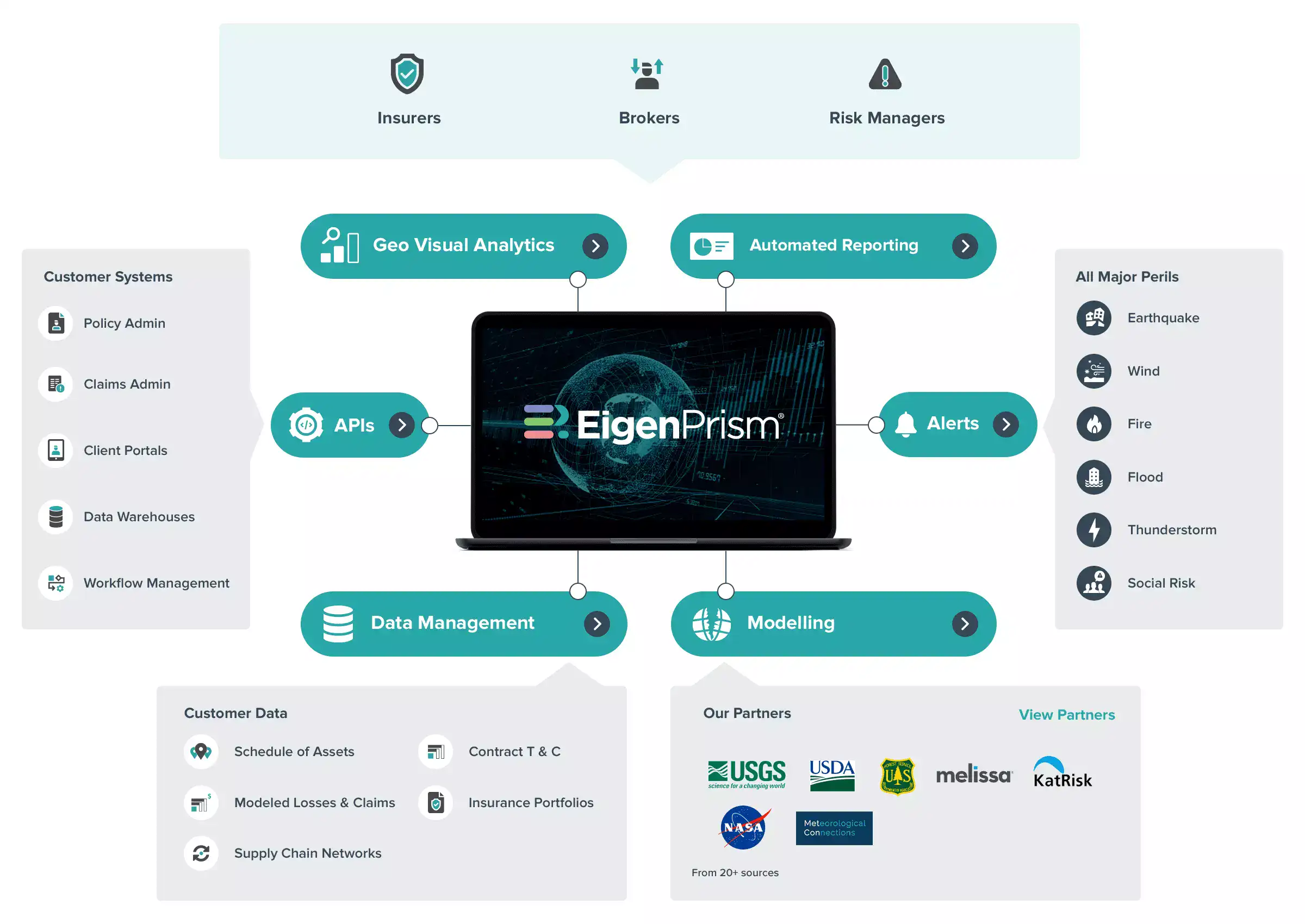

This is now about to change. It starts with the latest generation of technology (read our blog on swift data). But it also requires a radical rethinking of the way business data is stored, models are defined, analytical engines are architected, and user interfaces are designed. In this brave new world, models run and data moves at unprecedented speeds, all in the background, as users interact directly with analytical tools working on real-time data sets. They are able to “ask” questions instead of writing database queries, visualize their exposure data at any level of detail on their mobile devices, scroll through results from multiple models generated at the same time, and much more. As a result, they find it easier and faster to make better decisions.

This is speed-of-thought risk analytics, and it is arriving faster than you might think.