Bringing Real Transparency to Risk Modeling

I have found that this thinking still pervades the risk modeling community. Transparency is a word much bandied about at conferences, and it is still often equated with the quality of model documentation. More recently, there have been several new initiatives around “open modeling” and “open source models”. These are moves in the right direction, but I think they still miss the main point.

Real transparency is about the model consumer having confidence in the data and model results that drive their decisions. Whether it is an underwriter trying to “right-price” a contract or a portfolio manager assessing their unused capacity, transparency is about how easily they can answer the critical questions that arise as they move from numbers to decisions.

Real transparency in modeling is only possible when the underlying framework has been designed from the ground up to facilitate such exploration. You cannot simply retrofit existing models to do this (although you might be able to improve their performance). “Opening up” the same old framework to other black boxes does not get you real transparency either; it gives you more black boxes inside a larger black box. Sharing your source code as a modeler is a noble attempt at transparency, but the main beneficiaries are other modelers who can understand and modify that code, typically not the model consumer.

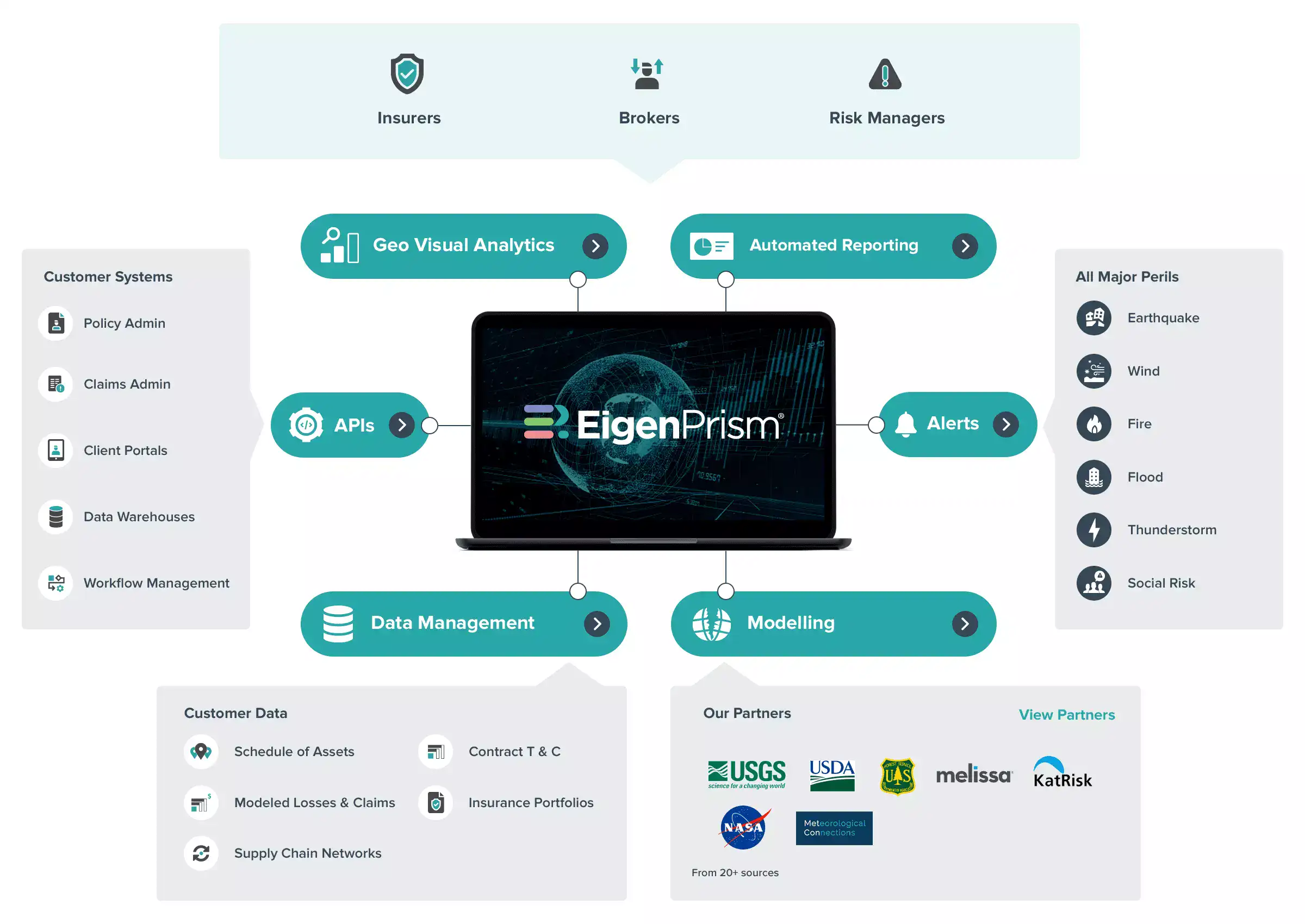

With the right technology and computational platform, however, transparency can be built into modeling from the get-go. A well-designed platform provides the “plumbing” that lets model developers deploy models easily and make them highly “interrogable”. It makes it easier for them to expose their science and engineering assumptions, and it relieves them of the burden of writing production code. The ultimate beneficiaries are model consumers: risk managers, underwriters, actuaries, analysts, portfolio managers, brokers and other risk management professionals. They are now empowered to trace model results all the way back to the source assumptions at any level of granularity, run what-if scenarios to assess the impact of those assumptions, mix and match component models to understand differences at a detailed level, and keep a clear audit trail throughout.

This is not some utopia; it is real transparency, and it should be an expectation of anything claiming to be a next-generation risk modeling platform.

And for the record, we have no plans to hire a transparency leader at EigenRisk!